AI Night: Resources worth knowing about.

I am hosting an AI Night meetup tonight and wanted to create a handout that people can walk away with, one that informs them without oversimplifying what's going on in the world of AI tech. This post is a digital version of that handout. The resources listed here will not be discussed in depth at the meetup; they are meant as additional material for the curious. At the meetup I will be hands-on with two specific AI models: ChatGPT and Stable Diffusion.

ChatGPT

A Large Language Model (LLM) created by a company called OpenAI and trained to interact in a conversational way. The model and its weights are proprietary. It is sometimes informally referred to as GPT-3.5.

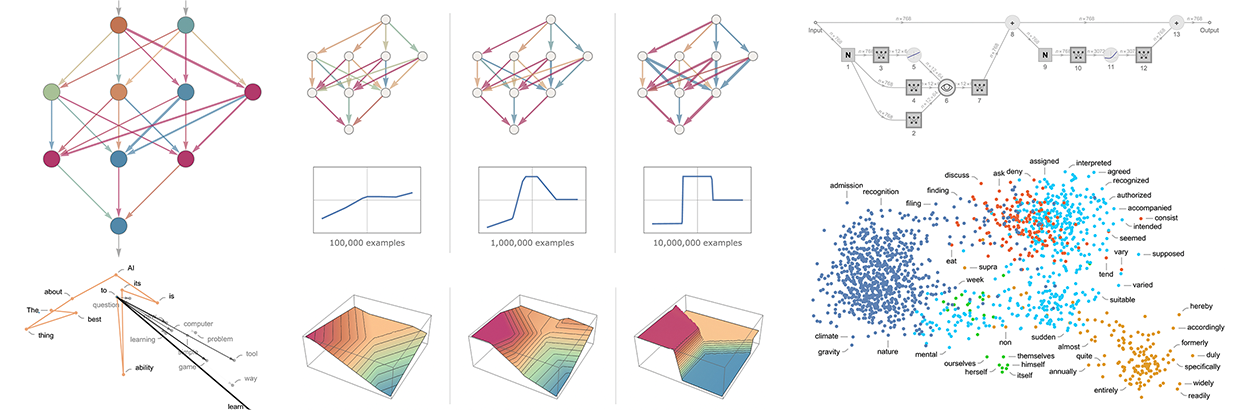

One of the best explanations of what ChatGPT is from a "how does it work?" perspective is given by Stephen Wolfram. Wolfram created WolframAlpha, which shares some similarities with ChatGPT but is mainly used to help solve problems in mathematics. One thing people lose sight of when thinking about ChatGPT is that it is a mathematical model. I commonly tell people that AI as it exists today is just statistics on steroids, and it can be misapplied just as often as statistical models are. So the better your foundational understanding of what's going on underneath, the better you will be at using AI models. Wolfram does a good job of staying true to the underlying mathematical concepts without diving too deep into any one particular example.

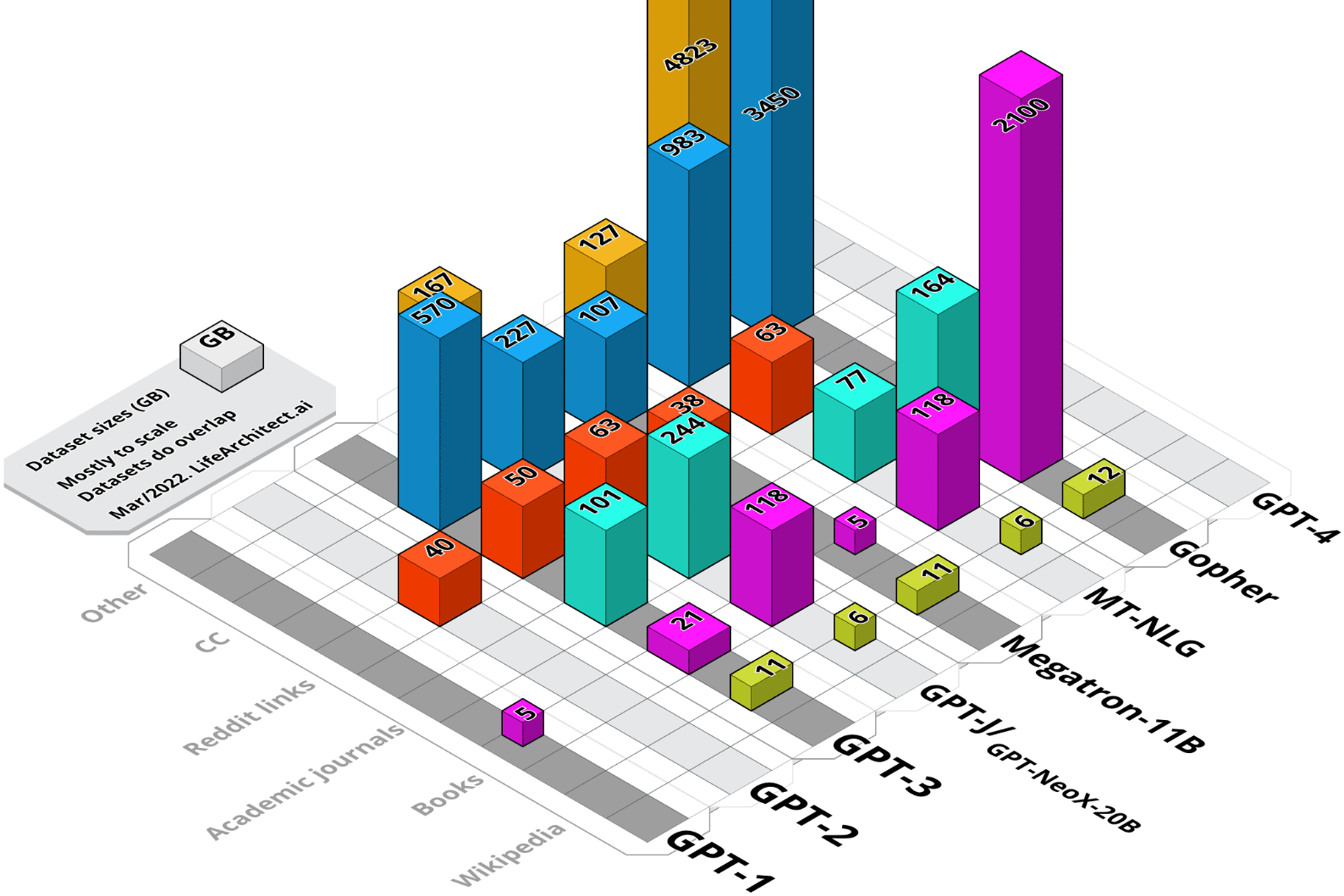
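
To make the "statistics on steroids" idea concrete, here is a minimal sketch of next-word prediction built from nothing but word counts. This is a toy illustration and not how ChatGPT is implemented (ChatGPT uses a large neural network, not a lookup table of counts), but it shows the basic idea of picking the next word from probabilities. The function names and the tiny corpus are made up for this example.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word_probabilities(counts, word):
    """Turn the raw follow-counts for `word` into probabilities."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: n / total for w, n in followers.items()}


def generate(counts, start, length, seed=0):
    """Sample up to `length` more words, one at a time, starting from `start`."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length):
        probs = next_word_probabilities(counts, out[-1])
        if not probs:  # the last word was never followed by anything
            break
        candidates, weights = zip(*probs.items())
        out.append(rng.choices(candidates, weights=weights)[0])
    return " ".join(out)


# Hypothetical toy corpus, chosen only so the numbers are easy to check by hand.
corpus = "the cat sat on the mat the cat ate the fish"
counts = train_bigrams(corpus)

print(next_word_probabilities(counts, "the"))
# {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
print(generate(counts, "the", 5))
```

In this corpus "the" is followed by "cat" twice and by "mat" and "fish" once each, so "cat" gets half of the probability. A model like this is only as sensible as the text it counted.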

Another point worth making is that an AI model is only as good as the data within it. A great deal of effort and sacrifice has gone into cleaning up data to avoid awful results. One resource worth pointing out is the one aggregated by Dr. Alan Thompson, which gives a good breakdown of the datasets that go into many well-established AI models from various tech companies.

How should I prompt ChatGPT?

Here are some techniques based on what I've observed and what others have taught me.

There is a style of prompting that I will refer to as "persona prompting", where the goal is to convince ChatGPT to speak to you in a certain way or from a certain expertise. In my mind, I usually think about how people explain what a PhD truly is.
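
As a rough sketch of what persona prompting can look like in practice, the snippet below assembles a persona instruction and a question into a list of role/content messages, the general shape used by chat-style APIs. The function name, the wording of the persona sentence, and the example persona are all assumptions made for illustration. Nothing here calls a real service.

```python
def build_persona_messages(persona, question):
    """Pair a persona instruction with the user's actual question.

    Hypothetical helper: the phrasing of the persona sentence is only
    one possible wording, not a recommended or official template.
    """
    system = f"You are {persona}. Answer from that expertise and in that voice."
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


messages = build_persona_messages(
    "a patient high-school physics teacher",
    "Why is the sky blue?",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

In the ChatGPT web interface there is no separate message list to fill in, so the same effect can be approximated by putting the persona sentence at the start of your prompt.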